The Context-Aware RAG Framework

The Context Journey

Every Embedding Tells a Story

Because context isn't optional—it's everything

One-Command Deploy

Get a production-ready RAG platform running with a single command: docker compose up. Zero configuration required.

Anthropic's Contextual Retrieval

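The only open-source framework implementing the full contextual retrieval methodology published by Anthropic. In Anthropic's benchmarks, the technique reduced top-20 retrieval failure rates by 35% with contextual embeddings alone and by up to 67% when combined with contextual BM25 and reranking.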

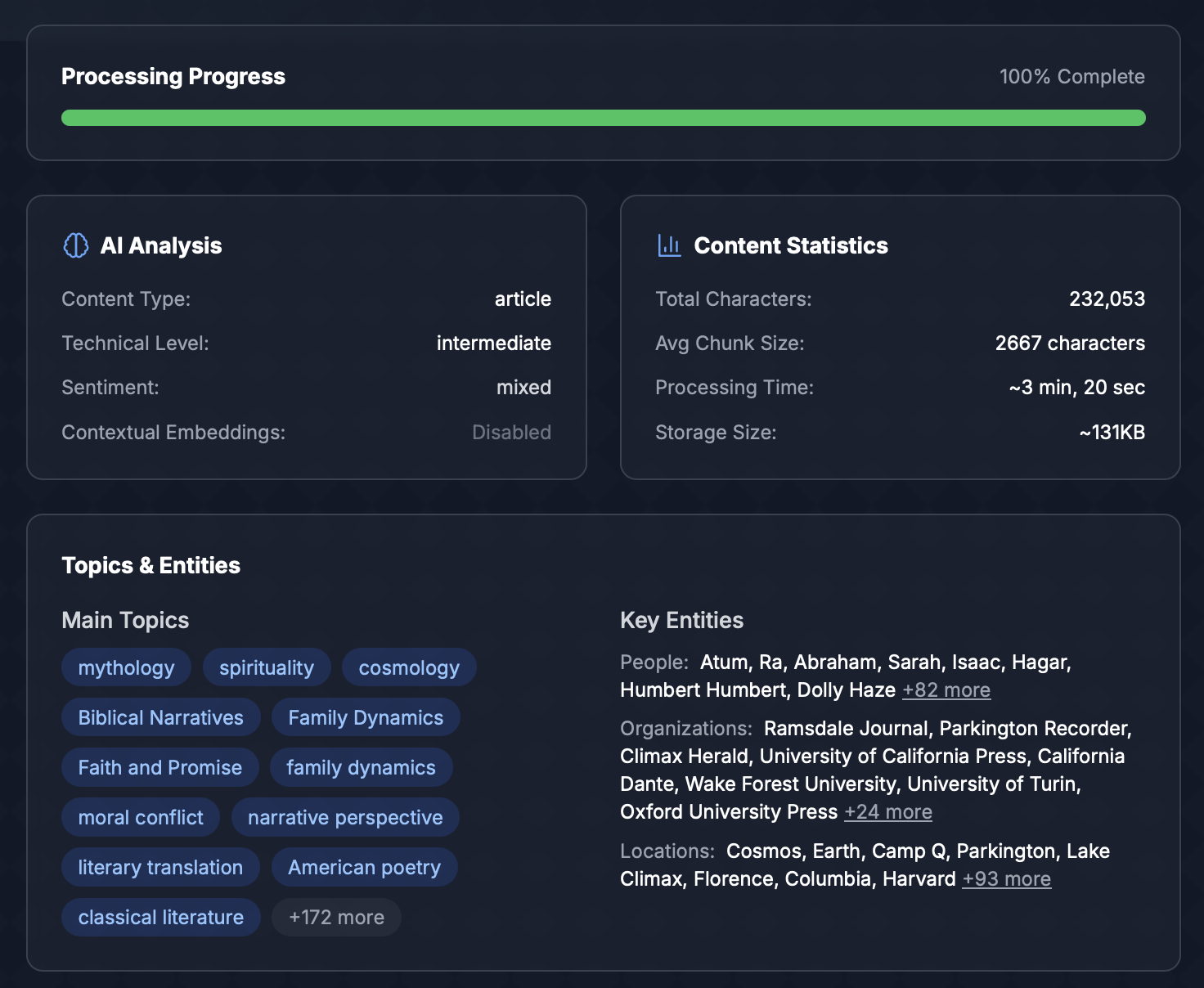

Dual-Stage AI Analysis

A unique two-stage pipeline: Stage 1 extracts 11+ metadata dimensions (such as sentiment, entities, and technical level), and Stage 2 adds a contextual description to each chunk before embedding. No other framework does both.
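The page does not show AutoLlama's code, so the following is only a minimal TypeScript sketch of how a two-stage pipeline like this could be wired, assuming each stage is an asynchronous model call. The names `ChunkMetadata`, `EnrichedChunk`, `enrichChunks`, `extractMetadata` and `describeContext` are hypothetical, and the three typed fields merely stand in for the 11+ metadata dimensions.

```typescript
// Hypothetical Stage 1 output. The page names sentiment, entities and technical
// level among its 11+ dimensions; these field names are illustrative only.
interface ChunkMetadata {
  sentiment: "positive" | "neutral" | "negative";
  entities: string[];
  technicalLevel: "beginner" | "intermediate" | "advanced";
  [dimension: string]: unknown; // room for the remaining dimensions
}

interface EnrichedChunk {
  text: string; // original chunk, kept unchanged
  metadata: ChunkMetadata; // Stage 1 output, stored alongside the vector
  context: string; // Stage 2 output
  textToEmbed: string; // context + chunk: the string sent to the embedding model
}

// Both stages are injected (hypothetical signatures), e.g. wrappers around an LLM call.
type ExtractMetadata = (chunk: string) => Promise<ChunkMetadata>;
type DescribeContext = (document: string, chunk: string) => Promise<string>;

async function enrichChunks(
  document: string,
  chunks: string[],
  extractMetadata: ExtractMetadata,
  describeContext: DescribeContext,
): Promise<EnrichedChunk[]> {
  const results: EnrichedChunk[] = [];
  for (const text of chunks) {
    const metadata = await extractMetadata(text); // Stage 1: content analysis
    const context = await describeContext(document, text); // Stage 2: contextual description
    results.push({ text, metadata, context, textToEmbed: `${context}\n\n${text}` });
  }
  return results;
}

// Usage with stubbed stages; a real deployment would call a model in each one.
const sampleDoc =
  "ACME Corp Q2 2023 filing. The company's revenue grew by 3% over the previous quarter.";
enrichChunks(
  sampleDoc,
  ["The company's revenue grew by 3% over the previous quarter."],
  async (): Promise<ChunkMetadata> => ({
    sentiment: "positive",
    entities: ["ACME Corp"],
    technicalLevel: "beginner",
  }),
  async () => "From ACME Corp's Q2 2023 filing.",
).then((enriched) => console.log(enriched[0].textToEmbed));
```

Keeping the metadata separate from `textToEmbed` means it can be used for filtering at query time without diluting the embedding itself.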

JavaScript-First RAG

Finally, enterprise-grade RAG for the Node.js ecosystem. While LangChain and LlamaIndex are Python-first projects (their JavaScript versions, LangChain.js and LlamaIndex.TS, are separate ports), AutoLlama brings cutting-edge retrieval to JavaScript developers.

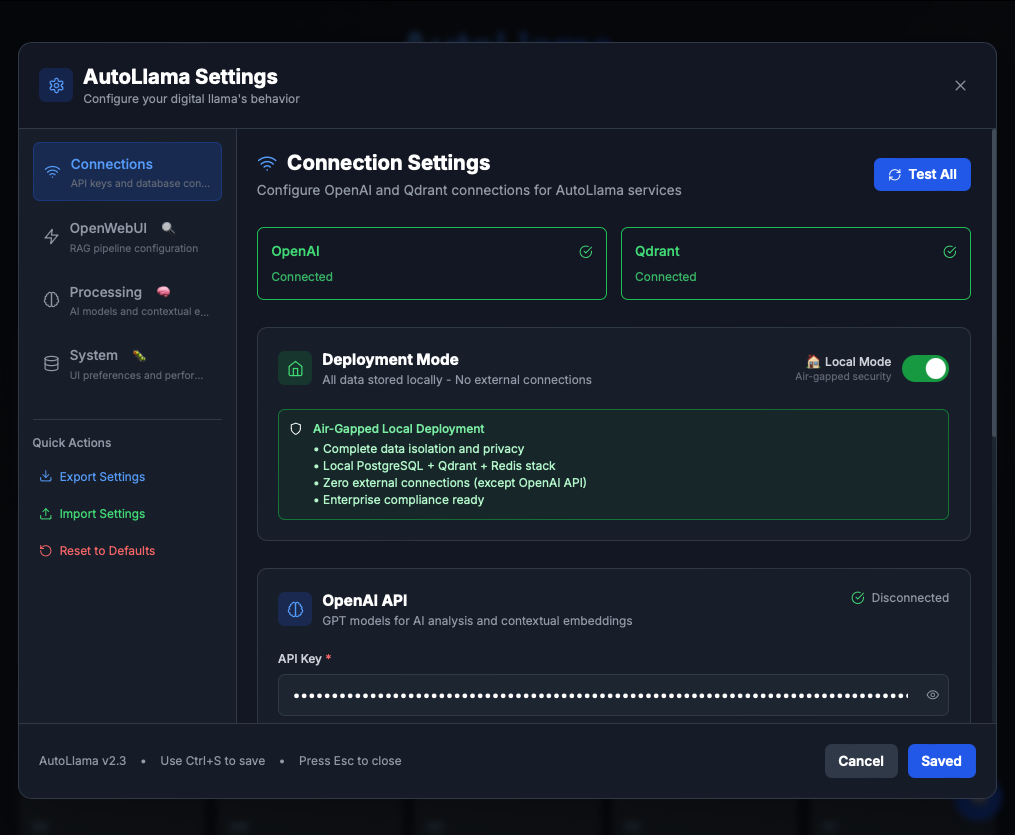

Air-Gapped Enterprise

Complete data sovereignty with Pure Local Mode in v2.3.4. Toggle between air-gapped local deployment and cloud services with one click. Built to support enterprise compliance requirements such as SOC 2, GDPR, HIPAA, and ISO 27001.

Actually Open Source

No hidden costs, no API limits, no vendor lock-in. Keep full control over your data with local deployment options. Compete with commercial solutions like Vectara and Azure AI Search without the enterprise price tag.

Watch Context Come Alive

See the same query fail in standard RAG and succeed in AutoLlama—the difference is context

❌ Before: Original Chunk

✅ After: AI Enhanced

🎯 Contextual Enhancement Value

The enhanced version combines the original chunk with document-aware context, so its embedding captures how this specific section relates to the broader document. Traditional RAG systems embed chunks in isolation: a chunk reading "The company's revenue grew by 3% over the previous quarter" does not say which company or which quarter it describes. Adding that context results in significantly more accurate semantic search and retrieval.
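As a rough illustration, assuming the enhancement follows the recipe Anthropic published for contextual retrieval: an LLM is asked to situate each chunk within its full document, and its answer is prepended to the chunk before embedding. The prompt wording below is adapted from Anthropic's write-up, and `buildContextPrompt` and `contextualizeChunk` are hypothetical names, not AutoLlama's API.

```typescript
// Prompt adapted from Anthropic's contextual retrieval write-up; the exact
// wording AutoLlama uses is an assumption.
function buildContextPrompt(wholeDocument: string, chunk: string): string {
  return [
    "<document>",
    wholeDocument,
    "</document>",
    "Here is the chunk we want to situate within the whole document",
    "<chunk>",
    chunk,
    "</chunk>",
    "Please give a short succinct context to situate this chunk within the overall document " +
      "for the purposes of improving search retrieval of the chunk. " +
      "Answer only with the succinct context and nothing else.",
  ].join("\n");
}

// Embedding input: the generated context first, then the untouched original chunk.
function contextualizeChunk(context: string, chunk: string): string {
  return `${context.trim()}\n\n${chunk}`;
}

// Usage: the model call itself is left out; `generated` stands in for its reply.
const report =
  "ACME Corp, Q2 2023 SEC filing. Previous quarter revenue: $314 million. " +
  "The company's revenue grew by 3% over the previous quarter.";
const chunk = "The company's revenue grew by 3% over the previous quarter.";
const prompt = buildContextPrompt(report, chunk);
console.log(prompt);

const generated = "This chunk is from ACME Corp's Q2 2023 SEC filing.";
console.log(contextualizeChunk(generated, chunk));
```

One reasonable design choice is to embed only the combined string while storing the original chunk separately, so answers can still quote the source text verbatim.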

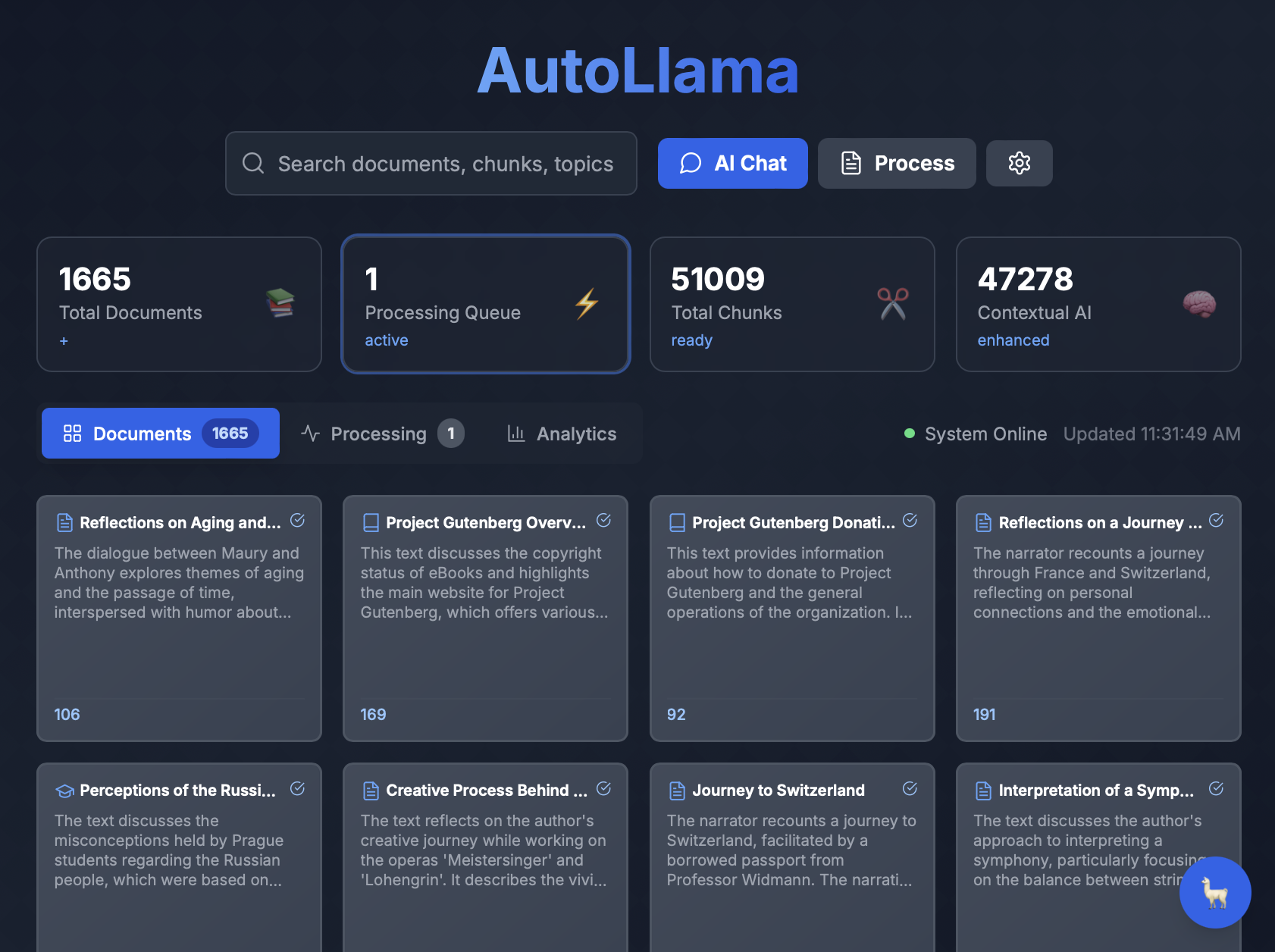

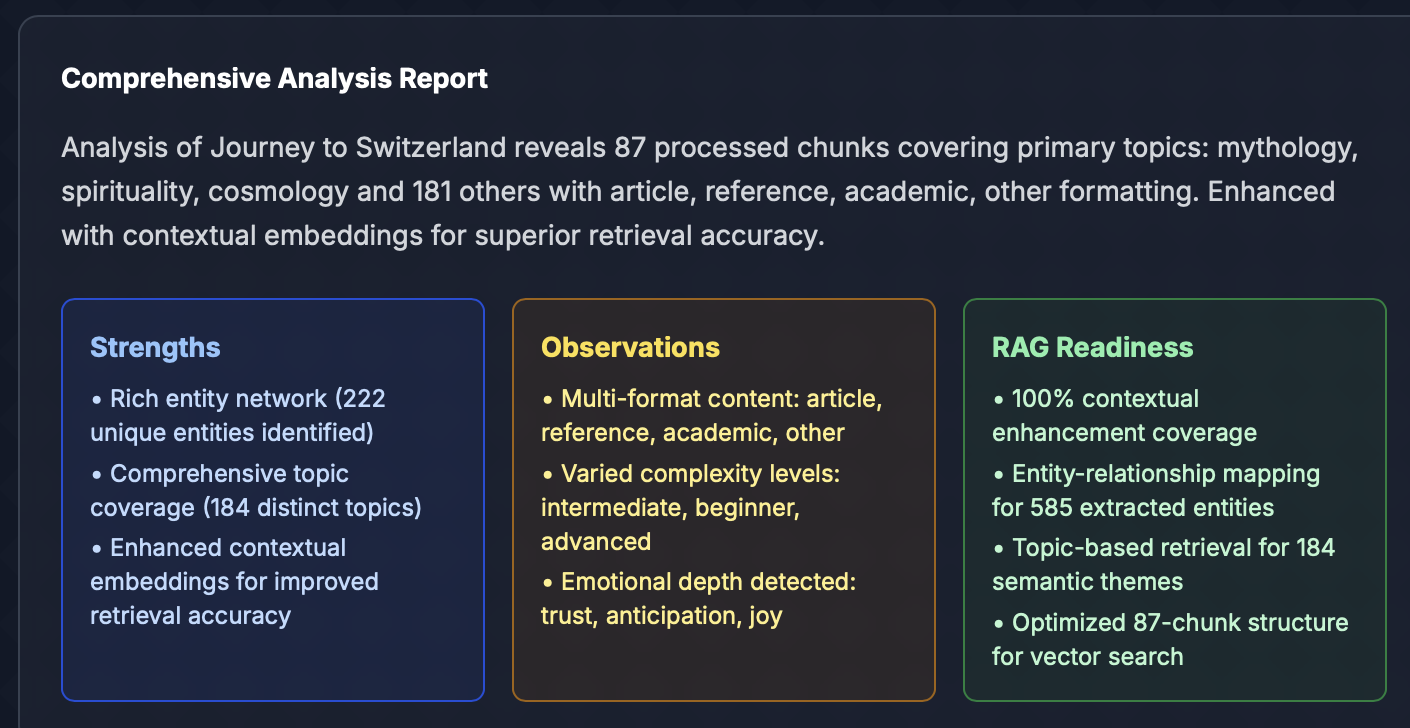

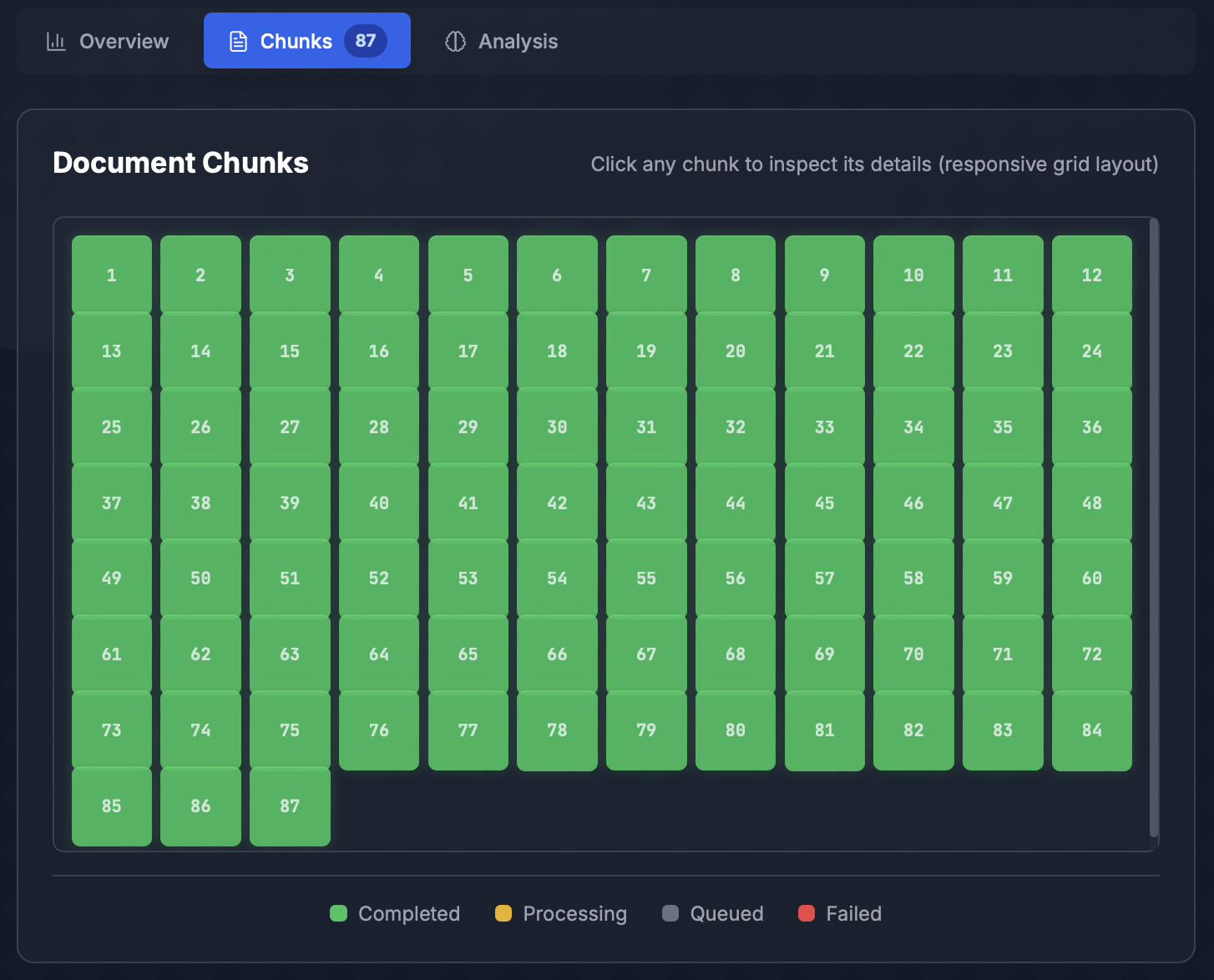

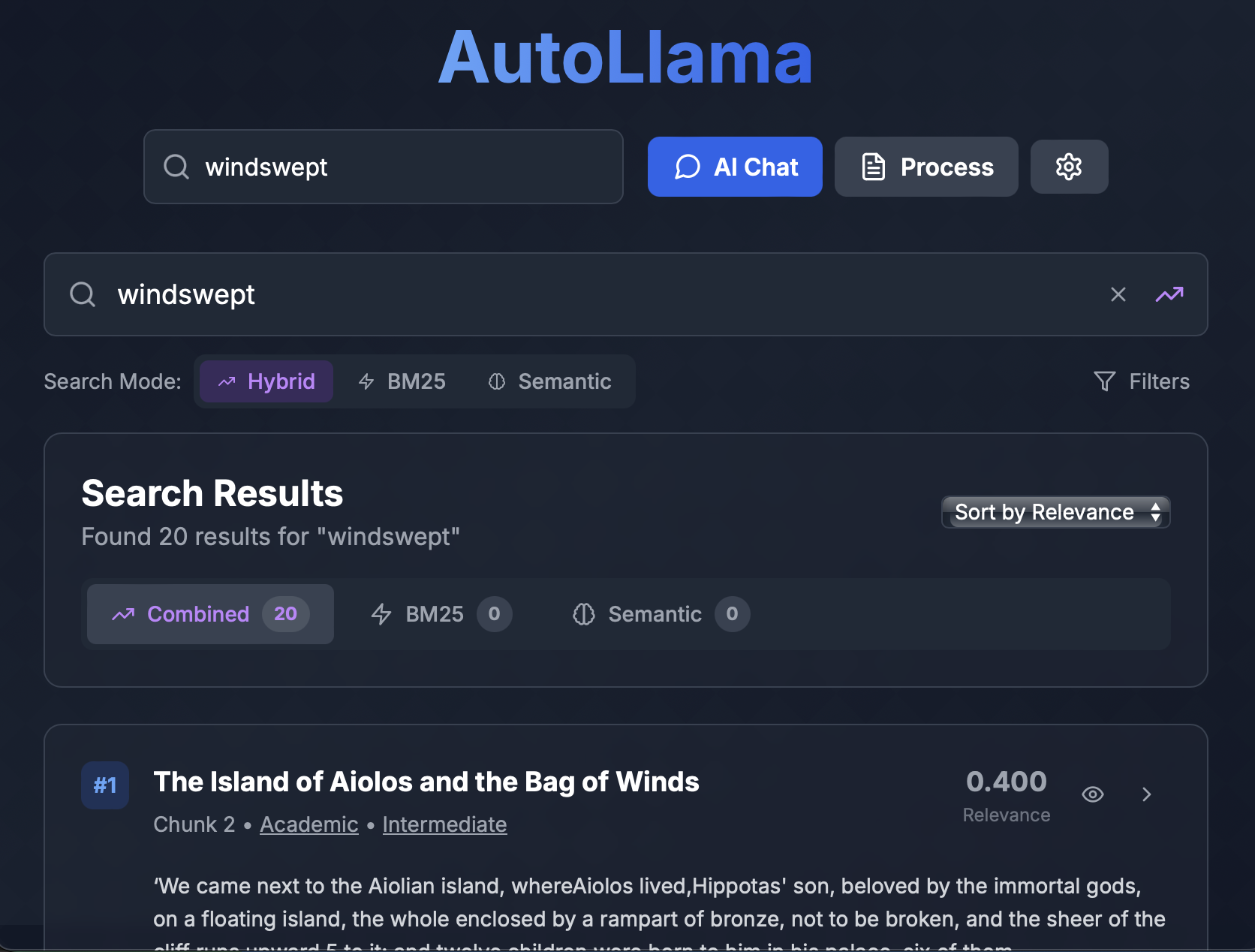

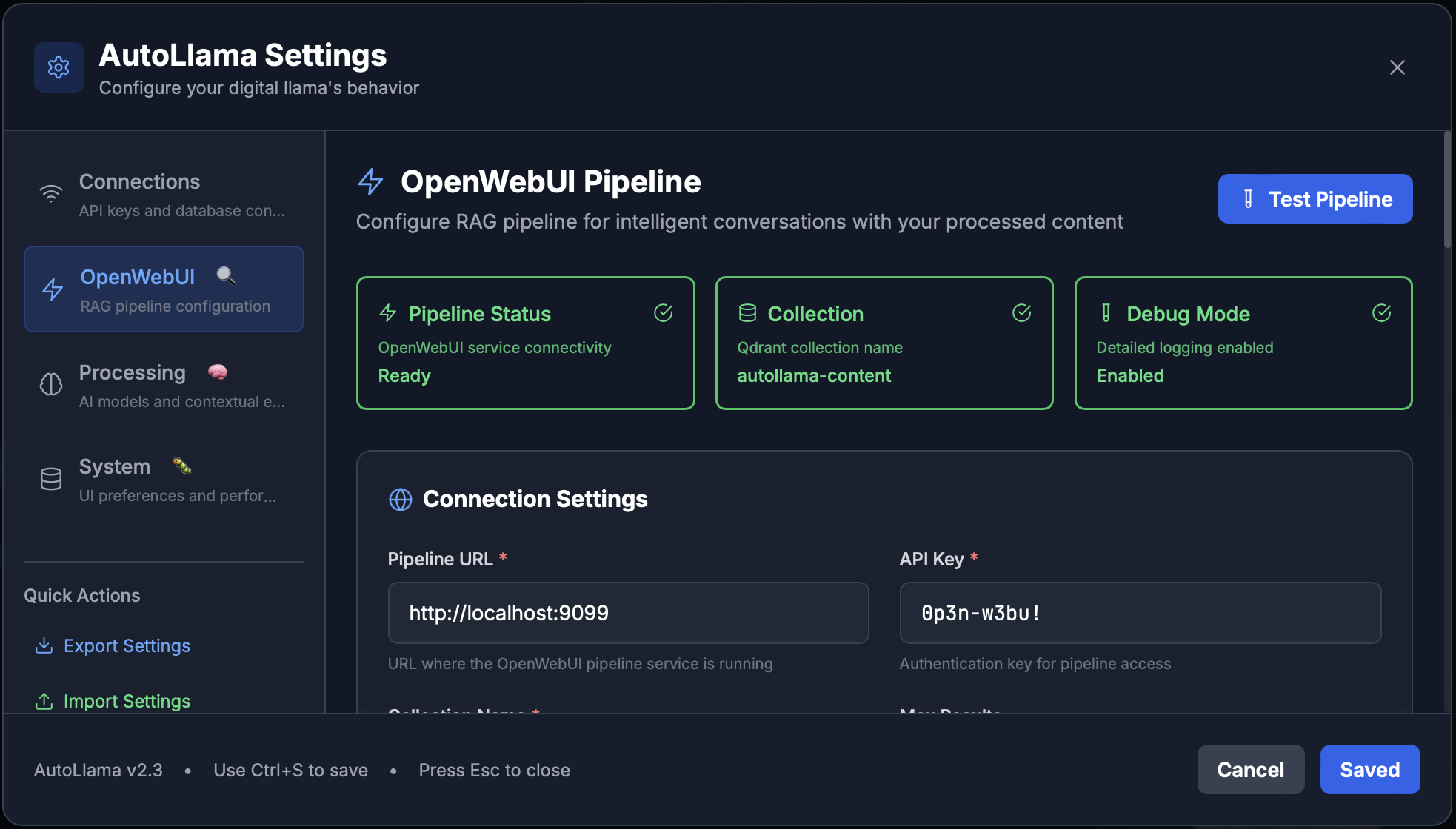

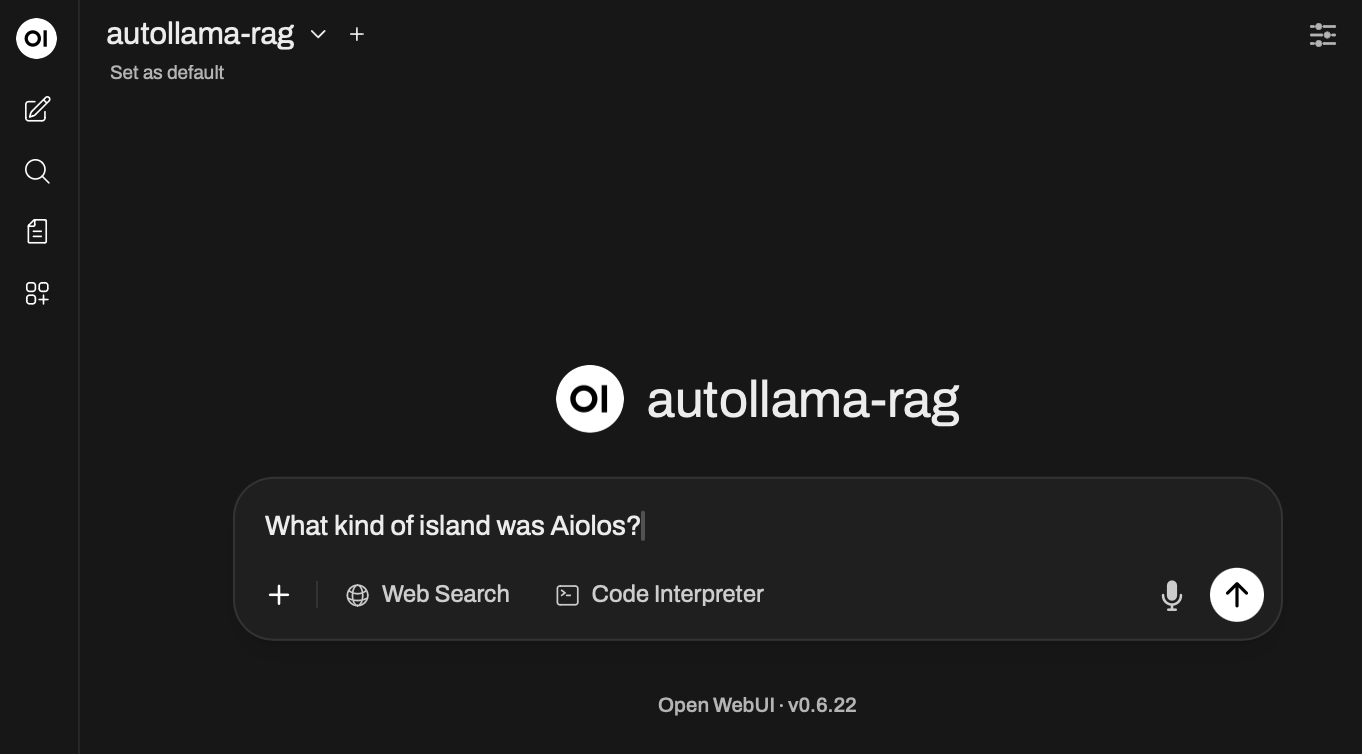

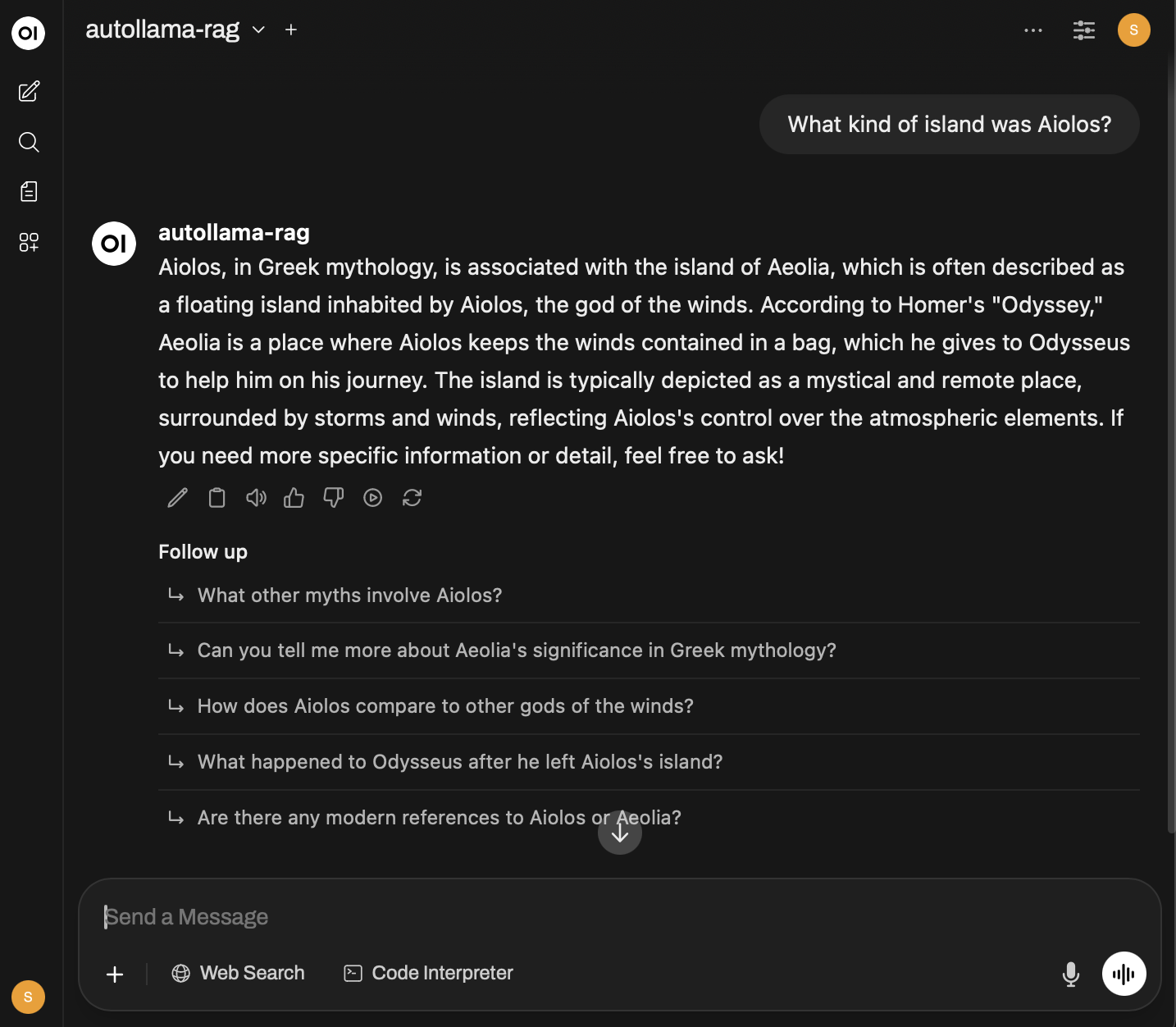

See AutoLlama in Action

Real screenshots from the AutoLlama platform—experience the interface that makes contextual RAG effortless


Stop Losing the Plot

Your documents have narratives. Your contracts have dependencies. Your research has connections. AutoLlama preserves them all.

LangChain: 105K GitHub stars; basic metadata extraction; complex orchestration

LlamaIndex: 40K GitHub stars; advanced indexing; Python ecosystem

AutoLlama: contextual retrieval ✓; 11+ metadata dimensions ✓; JavaScript-first ✓

Commercial solutions: Vectara $500-10K/mo; Azure AI Search $50-5K/mo; AWS Bedrock pay-per-use

"For developers who need production-ready RAG that actually works, AutoLlama is the only open-source framework that combines Anthropic's contextual retrieval with comprehensive content analysis."

Why We Built The Context-Aware RAG Framework

We believe documents are more than bags of words. They're structured thoughts, connected ideas, and contextual relationships.

We believe that when you ask a question, you deserve an answer that understands not just the words, but the story they're part of.

We believe context isn't a nice-to-have—it's the difference between information and understanding.